DirectX 11: A look at what's coming

It doesn't seem all that long ago that Microsoft first started talking about what DirectX 10 would bring to game development. In fact, it was less than two years ago that we described the new pipeline, and since then we've seen a couple of generations of hardware from both AMD and Nvidia. With the release of Windows Vista Service Pack 1, Microsoft introduced DirectX 10.1. We covered this in our RV670 architectural analysis, and so far only AMD has adopted the updated API. Nvidia claims that developers wanted other things, like GPU-accelerated game physics, and so it chose to focus on those features in the GT200 architecture.

Some would say Nvidia's decision stifled progress in graphics, while others believe it marked the first major shift away from focusing on graphics alone in games. Whichever way you look at it, the decision was a little controversial, given that Assassin's Creed's DirectX 10.1 support was removed in a patch.

And so we arrive at DirectX 11, the next major update to Microsoft's fabled graphics API. Microsoft's DirectX architects haven't been resting on their laurels since DirectX 10's release. In fact, the company had already started work on what is now known as DirectX 11 before DirectX 10.1 shipped with Vista SP1. Nor is that the end of it: developers we've spoken to have even commented on what they need in DirectX 12!

Microsoft announced the new API at GameFest 2008 in Seattle, but it's still a work in progress. Since then, we've had the chance to hear Kevin Gee of Microsoft outline the API at Nvision 2008, and afterwards we caught up with a number of developers to discuss the new API and its aims. We've also listened to what was discussed at GameFest, since Microsoft has conveniently made all of its presentations, along with the accompanying audio recordings, available to the public.

What follows is the result of all the information gathering I've done since the original announcement in July. However, because DirectX 11 is still a work in progress, things may change between now and its RTM date.

Where we are now

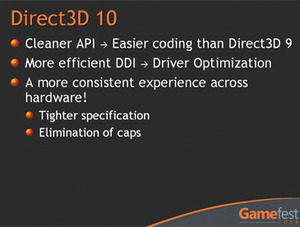

When DirectX 10 was released, Microsoft decided to wipe the slate clean and start afresh. The result was a completely new driver model, which meant that compatibility with older Windows operating systems, such as the incredibly popular Windows XP, was controversially cut. The DirectX team's latest baby came to Vista, and Vista alone. This was undoubtedly a brave decision, but it was a necessary one in order to finally get rid of problems that had been around since DirectX first arrived on the scene in 1995.

Before DirectX 10 arrived, it's fair to say that Microsoft's API was becoming increasingly difficult to code for because of its optional features: hardware didn't have to support a feature in order to be compliant; instead, it just had to expose the capability to the API.

Exposing a capability but not being able to use the feature at all is about as useful as a chocolate teapot. The good thing is that with DirectX 10, Microsoft made everything in the specification mandatory. It became an all-or-nothing affair, and that was great news for developers because they no longer had to worry about which hardware they were developing for, and there was no longer a need for vendor-specific codepaths.
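To make the difference concrete, here is a minimal, hypothetical Python model of the two approaches. The `Device` class, the capability names such as `fp16_blending`, and both `pick_codepath_*` functions are invented for illustration and are not real Direct3D types, caps bits or calls. The first function mimics the old style, where every optional capability needs its own branch; the second mimics DirectX 10's all-or-nothing model, where a single check either passes or the device isn't supported.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    """Hypothetical stand-in for a graphics device; not a real Direct3D type."""
    name: str
    # Capability flags the driver exposes (illustrative names only).
    caps: set = field(default_factory=set)


def pick_codepath_caps_style(device: Device) -> str:
    """Pre-DirectX 10 model: each optional feature needs its own check,
    so the renderer grows one branch per combination of exposed caps."""
    if {"shader_model_3", "fp16_blending"} <= device.caps:
        return "high-end path"
    if "shader_model_3" in device.caps:
        return "no-HDR-blending fallback"
    return "shader model 2 fallback"


# DirectX 10-style model: one mandatory feature set, fully supported or not at all.
# Illustrative subset only: shader model 4 and geometry shaders were both required.
DX10_REQUIRED = frozenset({"shader_model_4", "geometry_shader"})


def pick_codepath_dx10_style(device: Device) -> str:
    """All-or-nothing: a single check and a single rendering path."""
    if DX10_REQUIRED <= device.caps:
        return "dx10 path"
    raise RuntimeError(f"{device.name} is not DirectX 10 compliant")
```

For example, `pick_codepath_caps_style(Device("card A", {"shader_model_3"}))` falls through to the `"no-HDR-blending fallback"` branch. Every extra optional feature in the old model adds more branches like these, whereas the DirectX 10-style function never needs more than one.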

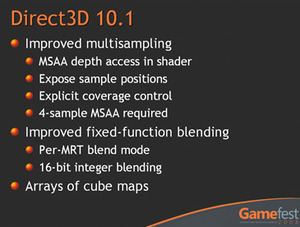

DirectX 10 wasn't without its problems, though, and DirectX 10.1 went some way towards fixing its shortcomings; in fact, Microsoft dubbed it 'Complete D3D10'. I said above that there were no optional features in DirectX 10, but in actual fact there were a few: FP32 filtering and 4xMSAA were optional in DirectX 10 and became required features for DirectX 10.1 compliance. Since most DirectX 10 hardware already supports both of these features, though, that's not really where 10.1's biggest attraction lies.

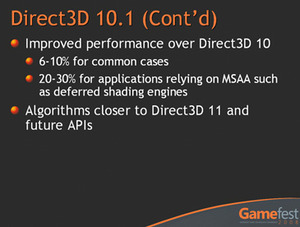

According to the developers we've spoken to, D3D10.1's biggest attraction is the ability to use MSAA in conjunction with deferred rendering (as used in any game based on Unreal Engine 3, along with several others) without needing to render depth to a separate texture. With DirectX 10.1, you can read from the multi-sample depth buffer when there are multiple render targets. This can improve performance quite considerably (in the region of 20 to 30 percent), because the operation can be completed in a single pass.
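As a rough illustration of why direct access to the multi-sample depth buffer helps, the sketch below models a deferred lighting pass on the CPU. It is a simplified, hypothetical model (the `light_pass` function, the nested-list depth layout and the `shade` callback are all assumptions, and real engines do this in GPU shaders). It assumes the lighting pass can read every depth sample directly, as DirectX 10.1 allows, so no earlier pass is needed to copy depth into a texture. It also shows one common way such access is used: pixels whose samples agree are lit once, while edge pixels are lit per sample and then averaged.

```python
from typing import Callable, List, Tuple

# depth[y][x][sample]: a hypothetical CPU-side view of a multi-sample depth buffer.
Depth = List[List[List[float]]]


def light_pass(depth: Depth, shade: Callable[[float], float]) -> Tuple[List[List[float]], int]:
    """Single lighting pass that reads per-sample depth directly.

    Returns the resolved per-pixel colours and how many times shade() ran.
    """
    out: List[List[float]] = []
    calls = 0
    for row in depth:
        out_row: List[float] = []
        for samples in row:
            if all(d == samples[0] for d in samples):
                # Interior pixel: every sample agrees, so light it once.
                colour = shade(samples[0])
                calls += 1
            else:
                # Edge pixel: light each sample, then average (the MSAA resolve).
                colour = sum(shade(d) for d in samples) / len(samples)
                calls += len(samples)
            out_row.append(colour)
        out.append(out_row)
    return out, calls
```

With 4xMSAA, a 1x2 image whose first pixel has four equal depths and whose second pixel straddles an edge costs five lighting evaluations in this model instead of eight, and it never needs a separate depth-copy pass.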

What I've found interesting is that although Nvidia has been incredibly cagey about which DirectX 10.1 features it supports, it did say that it was working with developers to expose multi-sample depth buffer reads on its current DirectX 10.0-compliant hardware. This is apparently one of the D3D10.1 features that Nvidia's hardware supports, although its use is unofficial because compliance with Direct3D is now an all-or-nothing affair.
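The all-or-nothing rule can be sketched as a simple subset check. This is a hypothetical model: the feature names are illustrative, and the list is deliberately incomplete. It names only the DirectX 10.1 features mentioned in this article, plus a placeholder, `remaining_10_1_features`, that stands in for the rest of the specification. A card can support individual 10.1 features and still fail the compliance check, which is the position the paragraph above describes for Nvidia's hardware.

```python
# Hypothetical model of all-or-nothing API compliance. Only features named in
# this article are listed; "remaining_10_1_features" is a placeholder for the
# rest of the DirectX 10.1 specification, not a real feature name.
DX10_1_FEATURES = frozenset({
    "fp32_filtering",
    "4x_msaa",
    "multisample_depth_read",
    "remaining_10_1_features",
})


def is_compliant(supported: set, required: frozenset = DX10_1_FEATURES) -> bool:
    """Compliance requires every feature; partial support does not count."""
    return required <= supported


def missing_features(supported: set, required: frozenset = DX10_1_FEATURES) -> set:
    """Which required features stop the device from being compliant."""
    return set(required - supported)
```

Under this model, a DirectX 10.0 card reporting `{"fp32_filtering", "4x_msaa", "multisample_depth_read"}` is not 10.1 compliant, yet a developer could still check for `"multisample_depth_read"` on its own and use it unofficially.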

Overall, then, specifications have become tighter and more stringent. That's a good thing from the developer's perspective, and it sets us up for where DirectX 11 comes into play...
